The PostgreSQL Vector Store node connects your pipeline to a PostgreSQL database, leveraging the pgvector extension for powerful vector search capabilities.

Key capabilities

- Stores high-dimensional vector embeddings within a standard PostgreSQL database.

- Utilizes the pgvector extension to perform efficient similarity and distance searches (ANN).

- Supports standard PostgreSQL tables, allowing vector data to be stored alongside structured metadata.

- Enables durable and scalable vector storage by leveraging PostgreSQL’s robust, transaction-safe architecture.

Configuration

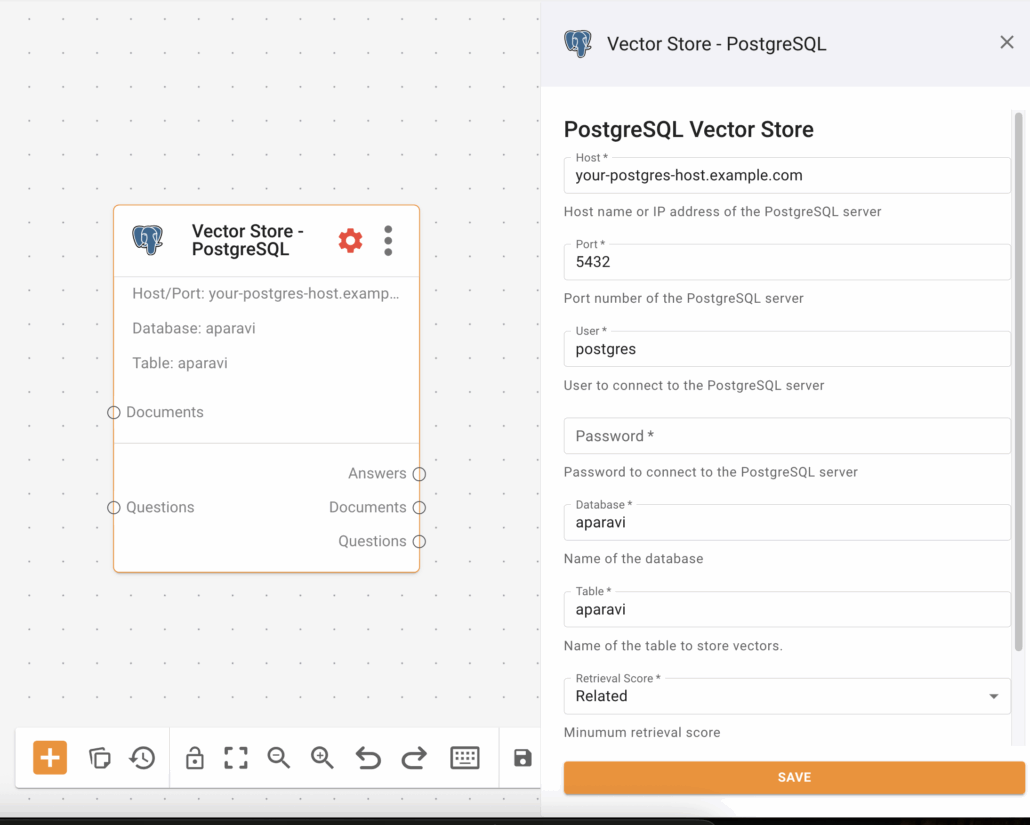

Basic Configuration

- Host: The hostname or IP address of the PostgreSQL server.

- Example – your-postgres-host.example.com

- Port: The port number for the PostgreSQL server connection.

- Example – 5432

- User: The username for database authentication.

- Example – postgres

- Database: The name of the database to connect to.

- Example – aparavi

- Table: The name of the database table where vectors will be stored.

- Example – aparavi

- Retrieval Score: The minimum similarity score (from 0.0 to 1.0) for a result to be considered relevant.

- Example – 0.5

Model Selection

- Similarity Metric: Choose the algorithm for comparing vector similarity. cosine is recommended for embeddings generated by transformer models. l2 (Euclidean) and inner_product are also available for other use cases.

API Key

- Password: Enter the password associated with the specified User for database authentication. This is not an API key but is required for access.

Inputs and Outputs

Input Channels

- documents: Receives vectorized documents to be stored. The input format is JSON objects containing the vector embedding and any associated metadata. While PostgreSQL has high limits, for best performance, input from the embedding model should not exceed 8192 tokens.

- questions: Receives vectorized queries for searching similar documents. The input format is a JSON object containing the query vector.

Output Channels

- documents: Emits the full document information retrieved from PostgreSQL for the best-matching results. The format is a stream of JSON objects.

- answers: Returns a condensed set of results containing vectors and metadata based on the retrieval score. The format is a stream of JSON objects.

- questions: Forwards the original incoming query vector downstream for logging or further processing. The format is the original JSON object.

Supported Model Variants

| Model Variant | Description | Max Tokens | Optimized for |

|---|---|---|---|

| cosine | Cosine distance. Measures the cosine of the angle between two vectors. | N/A | Semantic similarity with transformer-based embeddings. |

| l2 | L2 distance (Euclidean distance). Measures the straight-line distance between two vectors. | N/A | Image or feature similarity where magnitude matters. |

| inner_product | Negative inner product. Can be faster but requires normalized vectors for accurate results. | N/A | Maximizing performance with normalized embeddings. |

Data Flow Process

- Request Handling: Input documents or questions arriving on their respective channels are received as JSON objects. The connector transforms these objects into SQL statements compatible with the pgvector extension. For new documents, this is an INSERT statement. For queries, this is a SELECT statement with a vector similarity search clause (e.g., ORDER BY embedding query_vector).

- Response Handling: The results of the SQL query are retrieved from the database. The connector formats these database rows back into JSON objects and emits them on the appropriate output channel (documents or answers).

- Connection Management: The connector manages a connection pool to the PostgreSQL database to handle requests efficiently and concurrently.

Common Use Cases

- Semantic Search: Find documents conceptually similar to a user’s query rather than just matching keywords. Wire the pipeline as: Source Connector -> Embedding Model -> PostgreSQL Vector Store.

- Retrieval-Augmented Generation (RAG): Provide relevant context to a Large Language Model (LLM) to improve answer quality and reduce hallucinations. Wire the pipeline as: Source Connector -> Embedding Model -> PostgreSQL Vector Store -> LLM.