What does it do?

Key Capabilities

How do I use it?

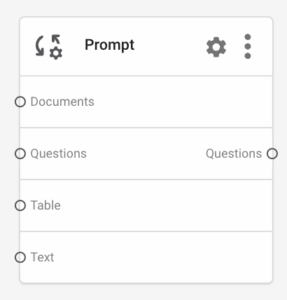

Add the Prompt Connector

- Insert the node between your data sources and LLM connector

- Configure your prompt template with placeholders for variables

- Define default values for optional variables

- Connect input channels for questions and optional documents

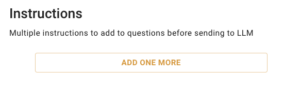

Configure Parameters

Configure Input and Output Channels

Data Flow Process

The Prompt connector follows a systematic data processing approach:

1. Input Collection

Action: Collect inputs from questions and optional documents channels

Purpose: Gather all data needed for prompt construction

Process: Read configured template and extract variables from inputs

2. Prompt Rendering

Action: Substitute variables and build complete prompt

Purpose: Transform template and data into executable prompt

Process: Apply variable substitution, attach system instructions, include examples or documents, apply output-format hints

3. Output Emission

Action: Emit single prompt message on questions channel

Purpose: Provide ready-to-execute prompt for LLM consumption

Process: No batching unless pipeline upstream splits inputs

Common Use Cases

Few-shot Prompting

Pipeline: Chain Prompt → LLM

Solution: Provide examples and variables in the Prompt node and send to the LLM node

Result: Enhanced LLM performance through example-based learning

RAG Prompting

Pipeline: Vector Store → Prompt → LLM

Solution: Feed retrieved documents into the Prompt node to build context-rich prompts

Result: Knowledge-augmented responses with retrieved context

Structured Output Generation

Pipeline: Data Source → Prompt → LLM → JSON Parser

Solution: Include JSON formatting instructions in prompt template

Result: Consistent, structured LLM outputs for downstream processing

Dynamic Question Answering

Pipeline: User Input → Prompt → LLM

Solution: Use variable substitution to customize prompts per user query

Result: Personalized responses with consistent prompt structure

Best Practices

Template Design

- Use clear variable names with descriptive placeholders

- Define default values for optional variables to prevent errors

- Structure prompts with clear system, user, and assistant sections

- Include specific output format instructions when structured responses are needed

Variable Management

- Validate required variables are provided in input data

- Use meaningful defaults that won’t break downstream processing

- Consider variable length limits for target LLM context windows

- Test with edge cases like empty or very long variable values

Context Optimization

- Monitor total prompt length to stay within model limits

- Prioritize most relevant documents when context is limited

- Use document chunking strategies for large context requirements

- Include retrieval metadata to help LLM understand document relevance

Technical Details

Variable Substitution Process

- Template Parsing: Identify all variable placeholders in template

- Input Mapping: Match input data fields to template variables

- Default Application: Apply configured defaults for missing variables

- Substitution: Replace placeholders with actual values

- Validation: Check for any remaining unresolved variables

Document Integration

- Documents are injected into designated template sections

- Multiple document handling with separators and numbering

- Automatic truncation if context limit is approached

- Metadata preservation for document source tracking

Error Handling

- Missing required variables trigger structured error outputs

- Template syntax errors are caught during configuration

- Context length violations generate warnings

- Invalid JSON in input data produces descriptive error messages

Frequently Asked Questions

Authentication Errors

Not applicable → API keys are managed by the downstream LLM connector.

Missing variable in template

Define a default in the template or pass the variable on the questions input.

Prompt too large for target model

Reduce included context or chunk upstream before Prompt.

Downstream LLM returns unstructured output

Add explicit JSON instructions in the Prompt template and validate downstream.

Hallucinations in answers

Strengthen system prompt and include retrieval context via documents input.

Expected Results

After using the Prompt connector in your pipeline:

- Well-formed Prompts: Consistently structured prompts ready for LLM execution

- Dynamic Content: Variable substitution enables personalized and context-aware prompts

- Enhanced Performance: Few-shot examples and clear instructions improve LLM output quality

- Structured Outputs: Format instructions guide LLMs to produce parseable responses

- Error Prevention: Validation and defaults prevent downstream processing failures